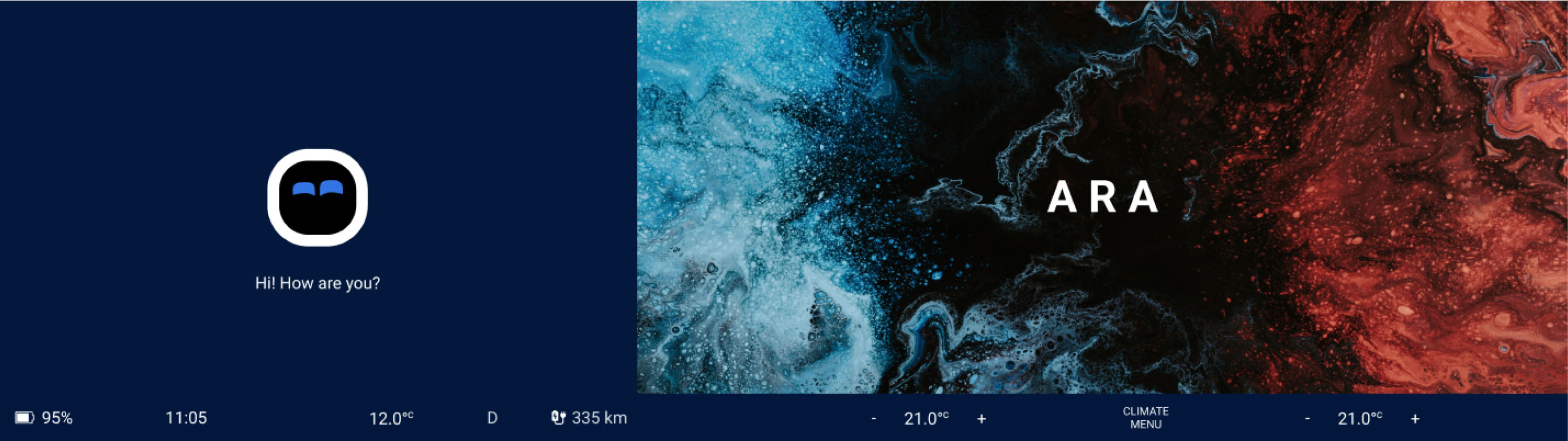

ARA

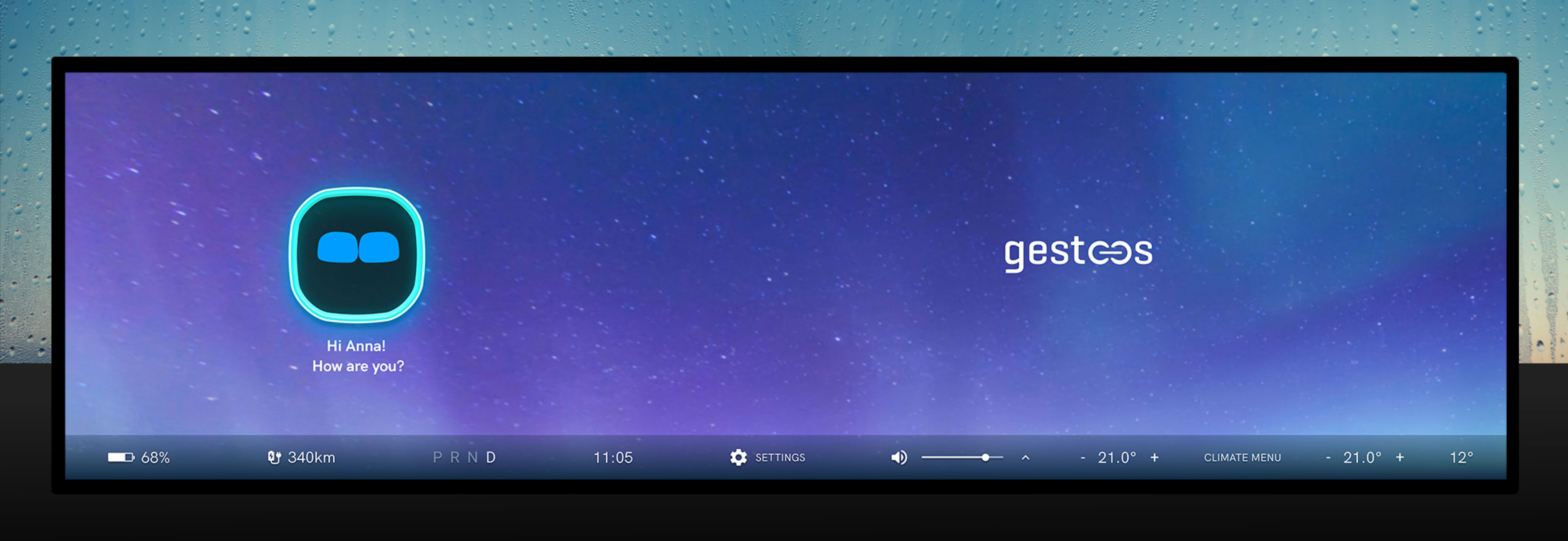

Gestoos AI assistant and multimodal infotainment system for Level 4 autonomous vehicles restores the ageing generation’s freedom of independent travel while promoting trust through the in-car experience design.

Date

Jan 2021 - Jun 2021

My Role

UX / UI Design Lead, Project Lead

Team

Anna Nguyen, Rachel Iczkovits, Alberto Pinardi

Stakeholders and advisors

-Germán León, CXO and Founder of Gestoos

-Pascal Landry, UX Researcher and Developer at Gestoos

-Rudolf Hemmert, Global Manager Technical Sales & Business Development, Advanced Safety & User Experience at Aptiv

-Maximilian Martin, Business Analyst, Advanced Safety & User Experience at Aptiv

-German Autolabs

-Sudha Jamthe, IoT Disruptions CEO and expert in Autonomous Vehicles and Artificial Intelligence

-Christopher Deutschler, Product Marketing Manager at BioIQ and former Voice of Customer Specialist at Mercedes-Benz

1.0. Overview

I was UX / UI Design Lead and Project Lead on a Master’s in User Experience Design thesis project for a 2030 concept AI assistant and multimodal infotainment system for Level 4 autonomous vehicles.

Aside from leading the team in all stages, my individual contributions to the project were:

-Main point of contact for the stakeholders

-Developed and created the style tile which conceptualized the visual direction for our high-fidelity design

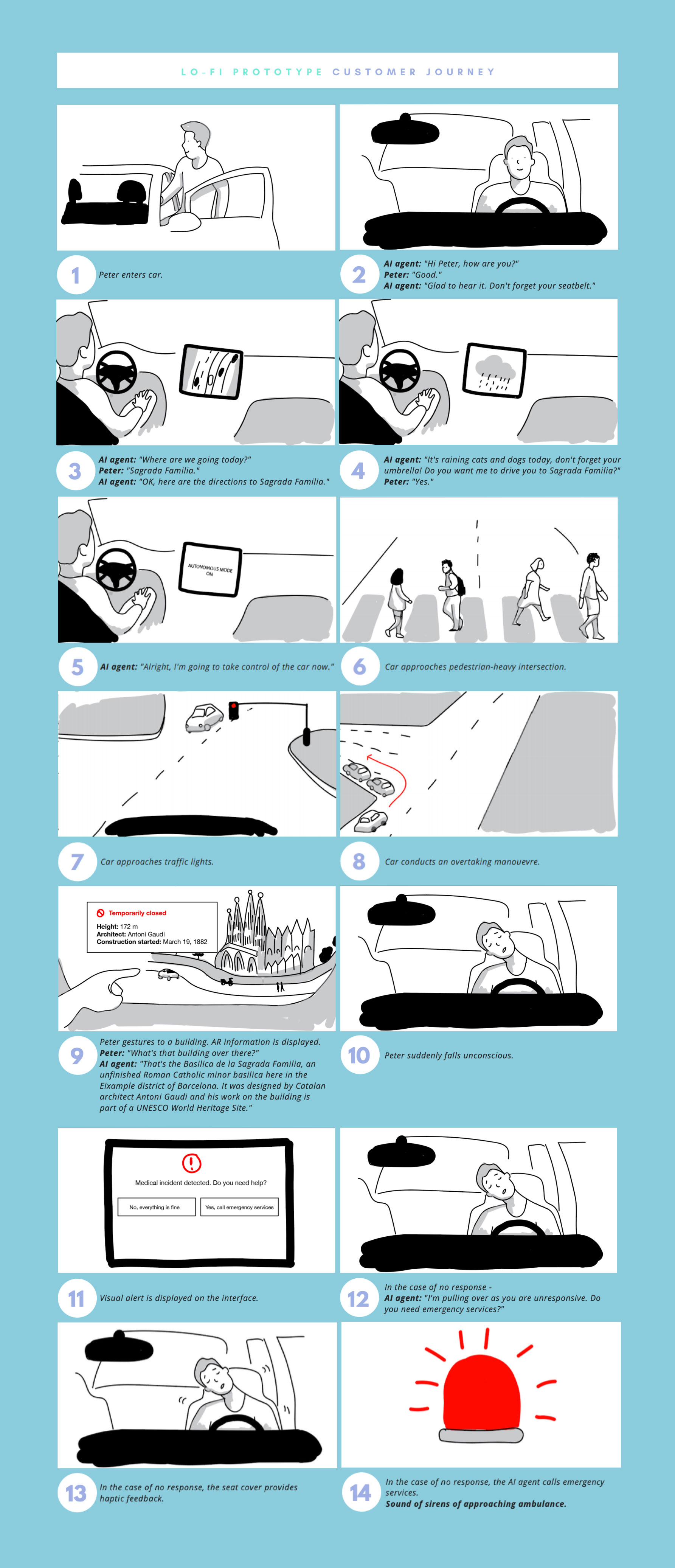

-Illustration of customer journey storyboard

-Planning and filming of the car journey for the user test simulation video

-Created the interactions for the high-fidelity prototype

-AI avatar design and animation

-Animation and visual design for the digital marketing video

-Presented the project to 20 people from Gestoos and Aptiv

-Presented the project to a panel of 4 judges: Jorge Márquez Moreno, Head of User Experience Design Europe at everis; Smailing Ventura, Chief UX / UI Designer at everis; Paula Mariani, UX Director and Founder of To Be Radiant; and Roberto Corrales, Product Designer at Wallapop

2.0. The challenge

Empowering the ageing generation

Level 4 autonomy refers to vehicles that can drive completely by themselves within a limited area and can be manually overridden. Our brief was to create a concept AI assistant and multimodal infotainment system, and our first task was to find a relevant problem and use case to address in the autonomous vehicle space.

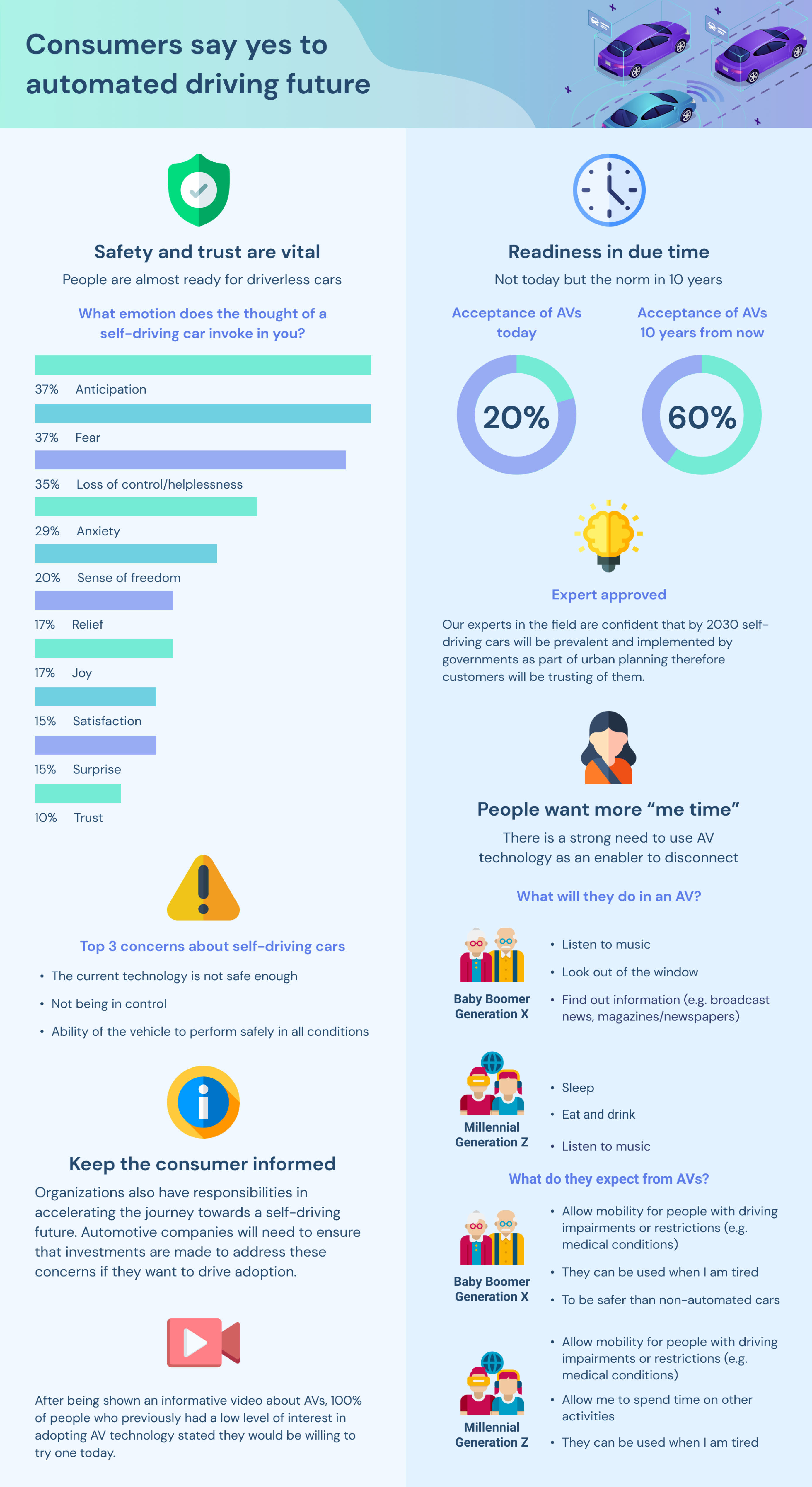

Our exploratory research showed that by 2030, although they will still want to travel, more and more Baby Boomers and Generation Xers will depend on others to help them get around. In 2019, the European Statistical Office estimated that 40% of 65–74-year-olds experience limitations in usual activities due to health problems. With the latest developments in AI, automated mobility solutions could be a promising way to overcome this issue.

Yet adoption of these novel technologies faces several challenges. Our research showed that many people today would choose a human-driven car over a self-driving car. They do not trust autonomous mobility because of a perceived lack of safety in the technology and feelings of helplessness and loss of control.

3.0. The solution

We designed a voice assistant and infotainment system for self-driving cars with the vision of revolutionising an ageing generation’s mobility experience by restoring their freedom of independent travel while promoting trust through the in-car experience design. We believe in giving people the choice of voice, touch, gaze, and gestures to interact with the AI of the car.

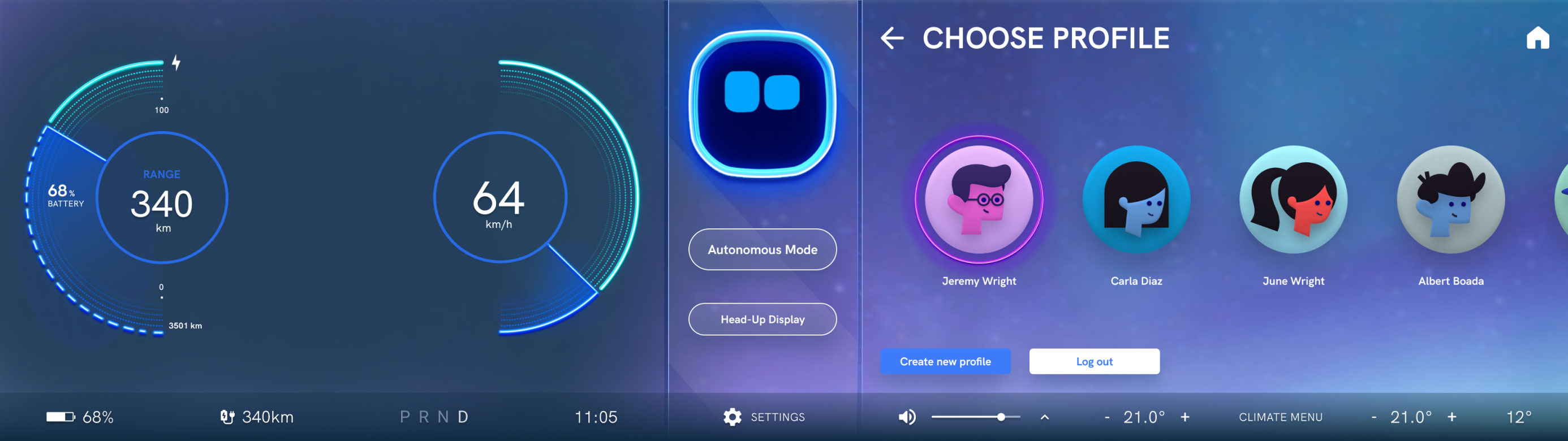

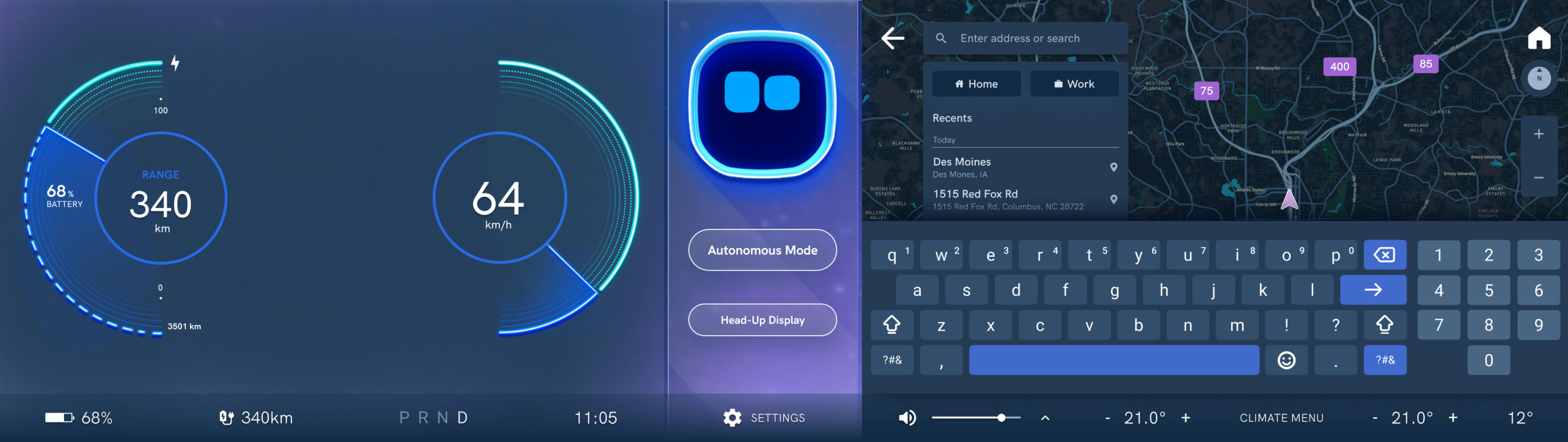

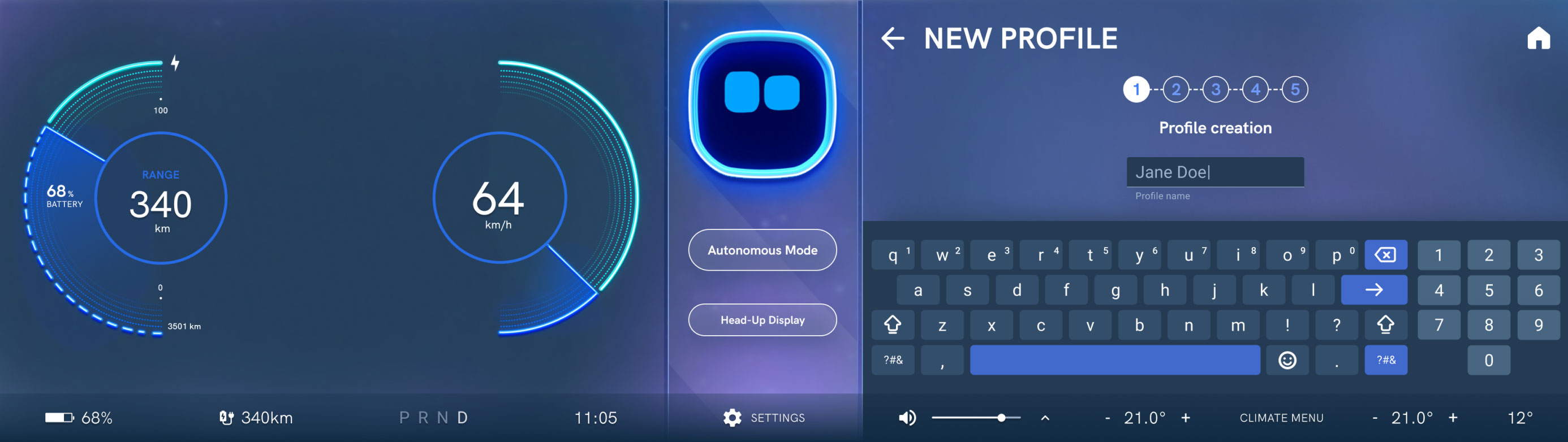

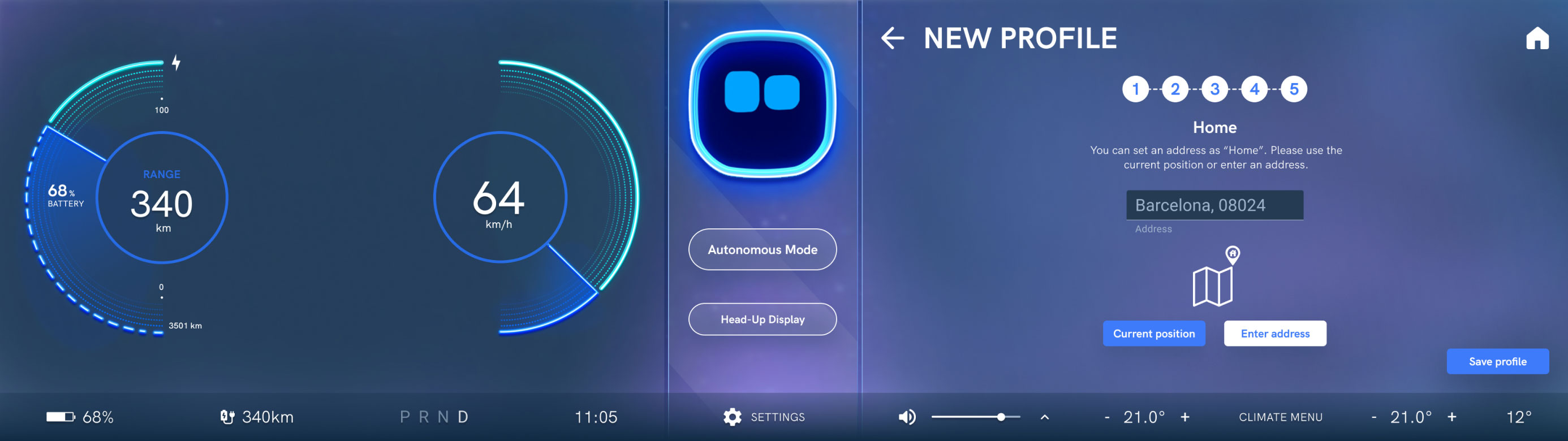

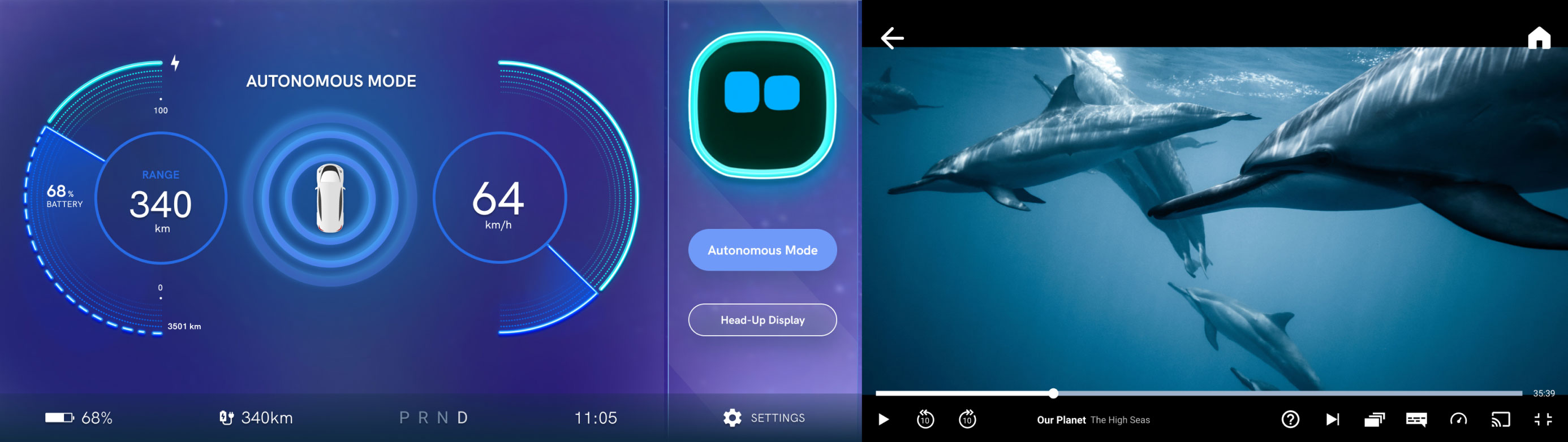

High-fidelity prototype

High-fidelity wireframes

4.0. Discover

We used a framework consisting of 4 phases (Discover, Define, Develop and Deliver), which allowed us to map out and organize our thoughts and improve the creative process.

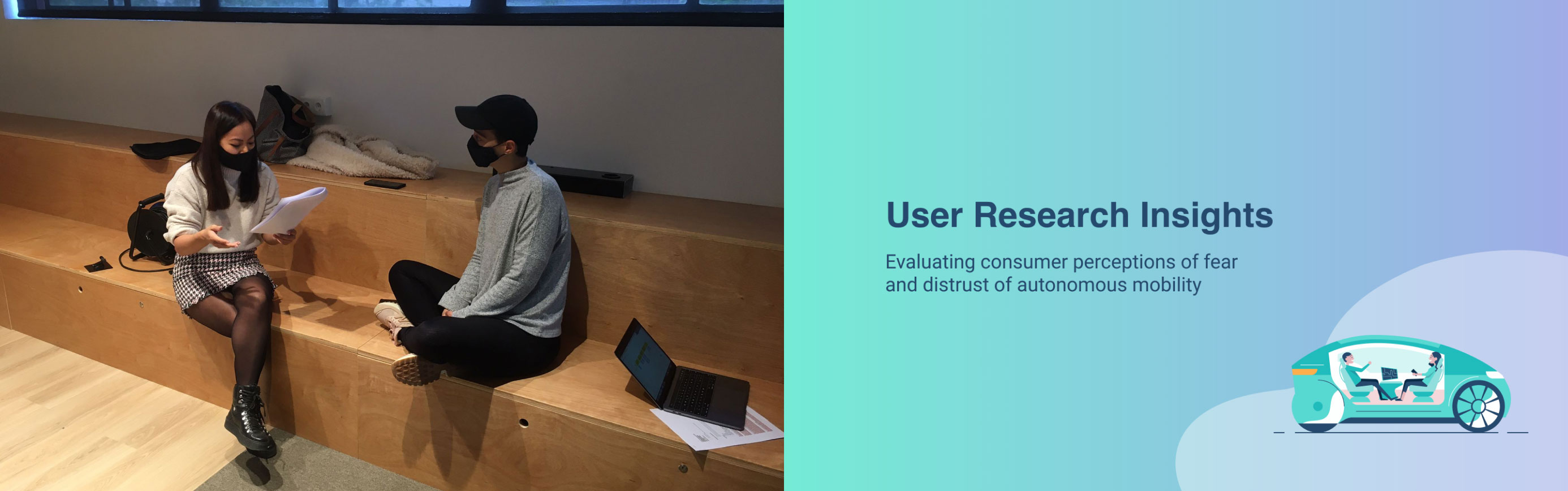

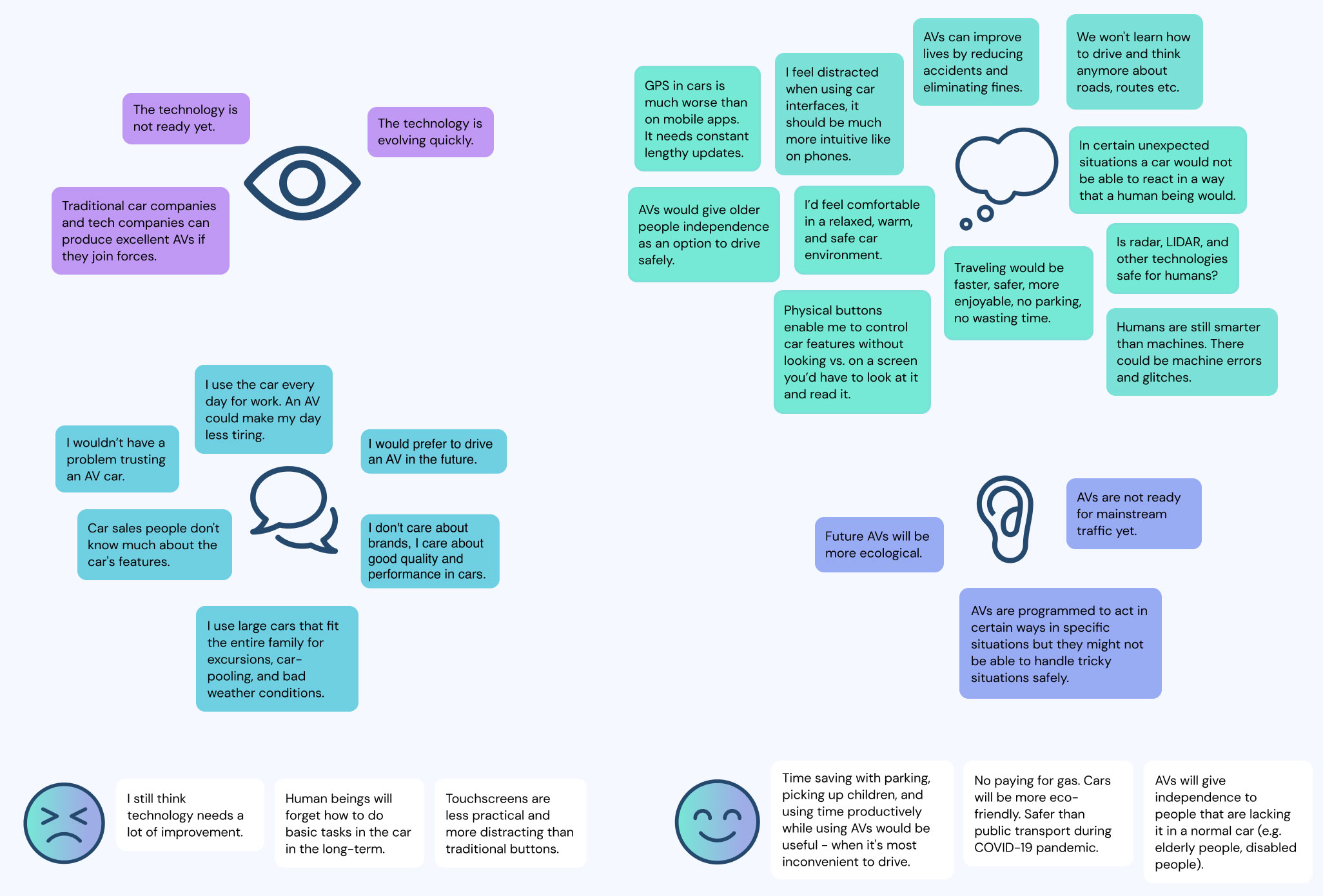

Through exploratory desk research, we discovered that consumers’ fear and distrust of autonomous mobility were still a significant barrier to adoption. We met with our stakeholders, Gestoos and Aptiv, and validated with them that the industry needs to address this challenge in order to create future car infotainment systems that respond effectively to these consumer concerns. We compiled the insights into a report as an initial deliverable for them.

We dug deeper into these issues through quantitative and qualitative research: in total we surveyed over 130 people and supported this with in-depth interviews with 5 consumers and 2 industry experts. For the research study, we targeted both Generation X and Baby Boomers (people currently aged 41-75 years) and Generation Z and Millennials (people currently aged 25-40 years) who drive or ride in cars.

Our goals for the study:

-To understand, in-depth, consumers’ attitudes and perceptions of fear and distrust of autonomous mobility due to perceived lack of safety in the technology and their perceived loss of control/helplessness.

-To assess other organizations’ responsibilities in accelerating the journey towards a self-driving future.

Infographic: Consumer perceptions of fear and distrust of autonomous mobility

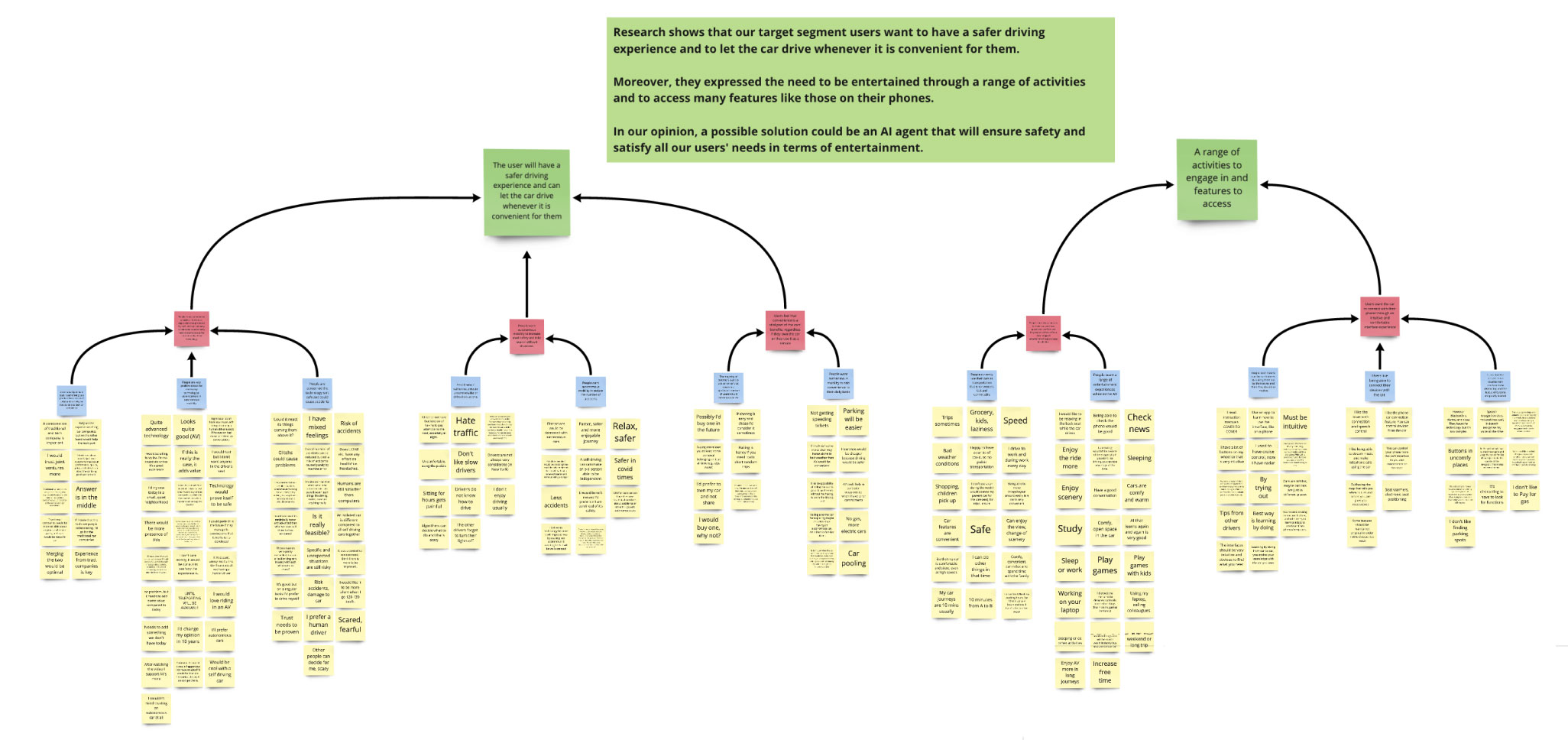

4.1. Define

In this phase, we synthesized the research through affinity mapping and defined our approach.

By organizing our collected user research insights into categories and analyzing them, we defined our approach through 2 “How Might We” questions which address the problems that we uncovered. Later we chose to focus on the first HMW and discarded the second.

How might we communicate the safety of autonomous vehicles to potential consumers?

How might we provide a personalised experience in an autonomous vehicle?

Target users

We decided to narrow the scope of our target users to only the Generation X/Baby Boomer group, so that our solution could focus on their set of needs. We found that trust in autonomous vehicles was currently the more crucial problem to solve for users, and that solving it could lead to a solution with the potential to offer valuable enrichment to people’s lives.

Scope and constraints

We also considered the problem statement for the Generation X/Baby Boomer target users to be more viable than a solution based on the entertainment experience for Millennials/Generation Z, as the scope of the project was a 2030 conceptual infotainment system and we did not have easy access to functional advanced entertainment technology. Gestoos, on the other hand, had already developed some functional interactive technology, which could enable us to build relevant use cases around promoting trust in the Generation X/Baby Boomer target group.

We successfully validated our identified scope, target users and problem statement with Gestoos, Aptiv and our expert advisors before proceeding.

Synthesize insights

After sorting through all of our collected data, we conducted empathy mapping to help us better understand our target users and reveal deeper insights.

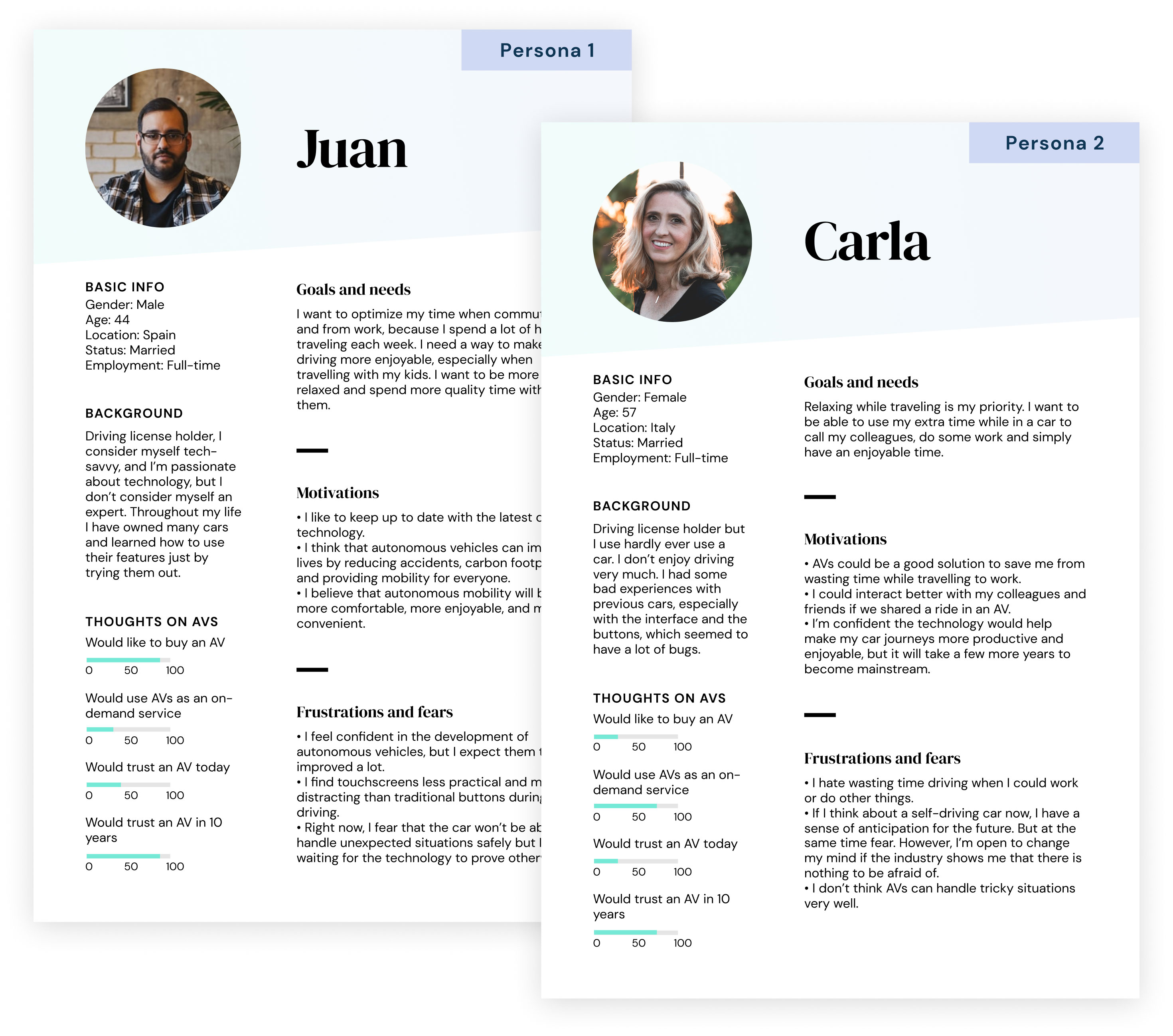

User personas

With the information from the empathy mapping, we created user personas to focus on what our target user wants from our solution. Our personas are Gen X/Boomers and both want to optimise their time in the car. On one hand, they fear that current autonomous mobility technology is incapable of handling unexpected situations, but on the other hand they expect that in 10 years the technology will prove that it can be trusted, so they are open to a potential improvement to their current driving experience.

For Juan, our solution required an interface that was easy and intuitive to interact with and that promoted a safer driving experience. For Carla, our solution needed to show seamless interactions to gain her trust and demonstrate the freedom to spend that extra time disconnecting or enjoying some entertainment.

4.2. Develop

In this phase, we ideated, evaluated and developed our solution.

Competitor usability testing

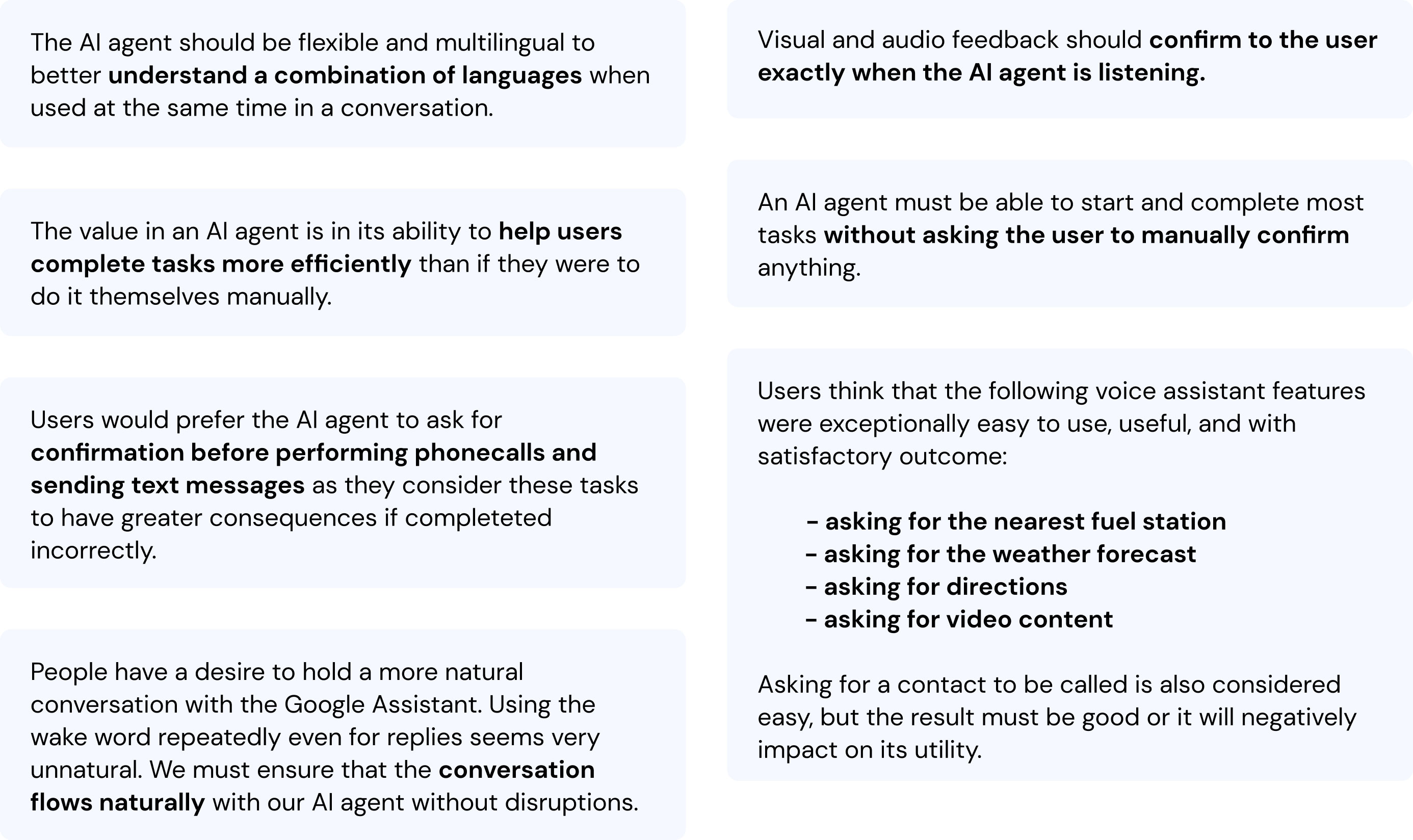

We conducted usability testing of a competitor product to understand pain points and gain insights that would help us to develop our solution.

As the car infotainment technology we were designing for is still in development, we decided to conduct usability testing with 3 users using Google Assistant on mobile devices to gain insights on the voice assistant.

We asked our participants to complete eight tasks of varying complexity using Google Assistant by voice only. This gave us important insights to consider when developing features for our AI agent, and helped us enter ideation with a more holistic understanding of AI voice assistants and possible opportunity areas for our product.

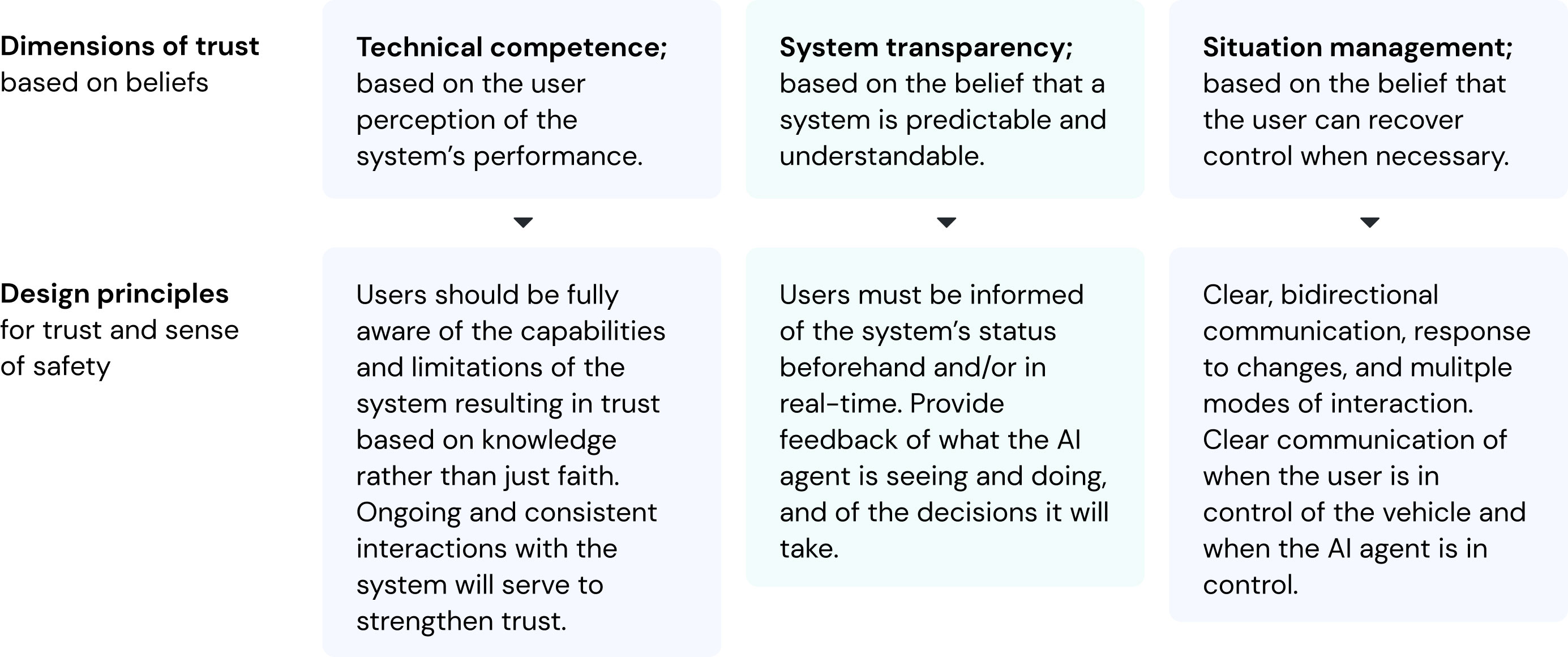

Design principles

Establishing trust, confidence and communicating safety is our most important user experience goal

It was essential that our design principles incorporated trust elements and that these formed the basis of our features. We first broke down the dimensions of trust based on 3 beliefs, and then we made design decisions that would reflect these principles.

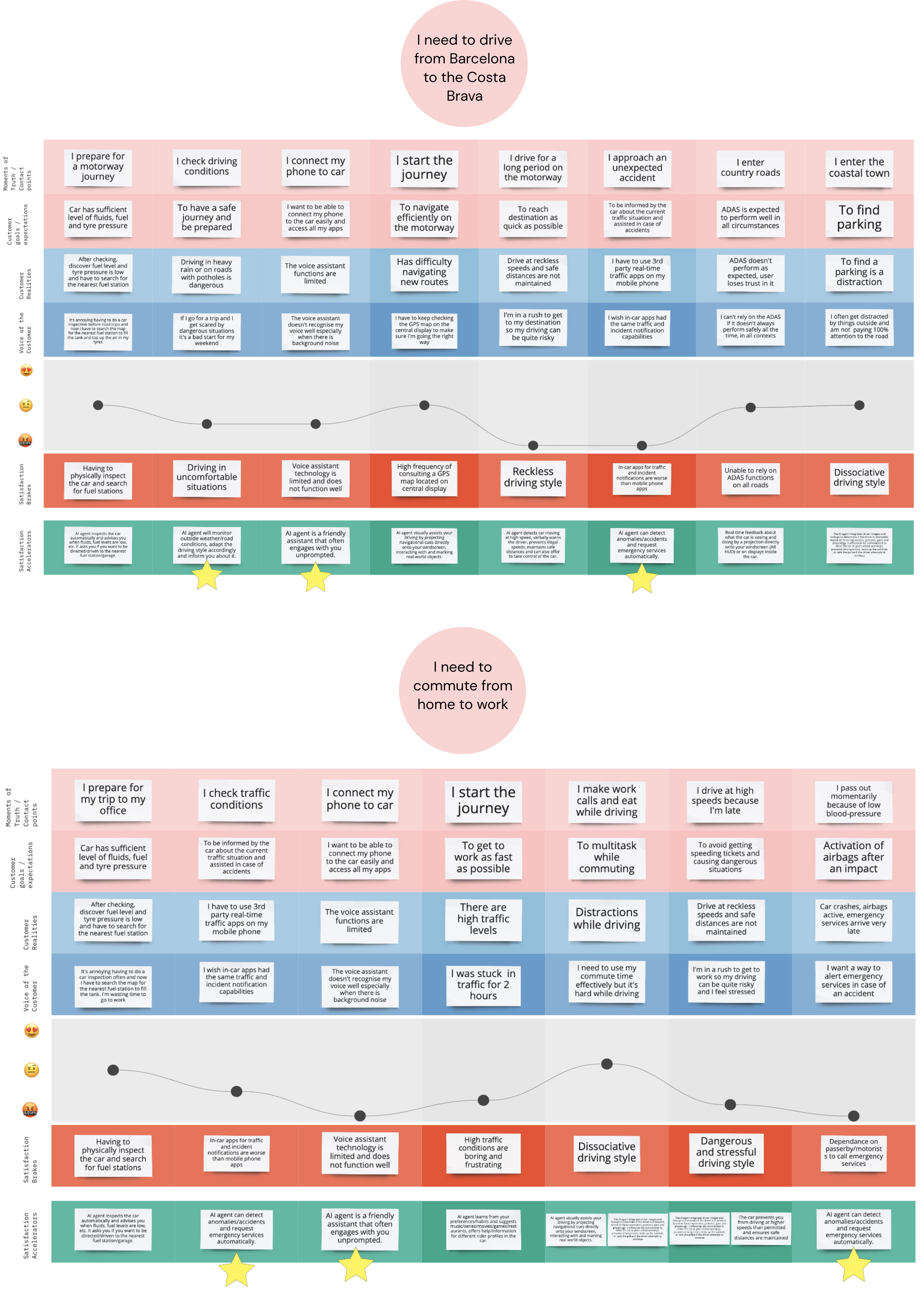

Customer journey mapping

We carried out customer journey mapping exercises with users from our target segment, to understand their goals and actions during typical car journeys.

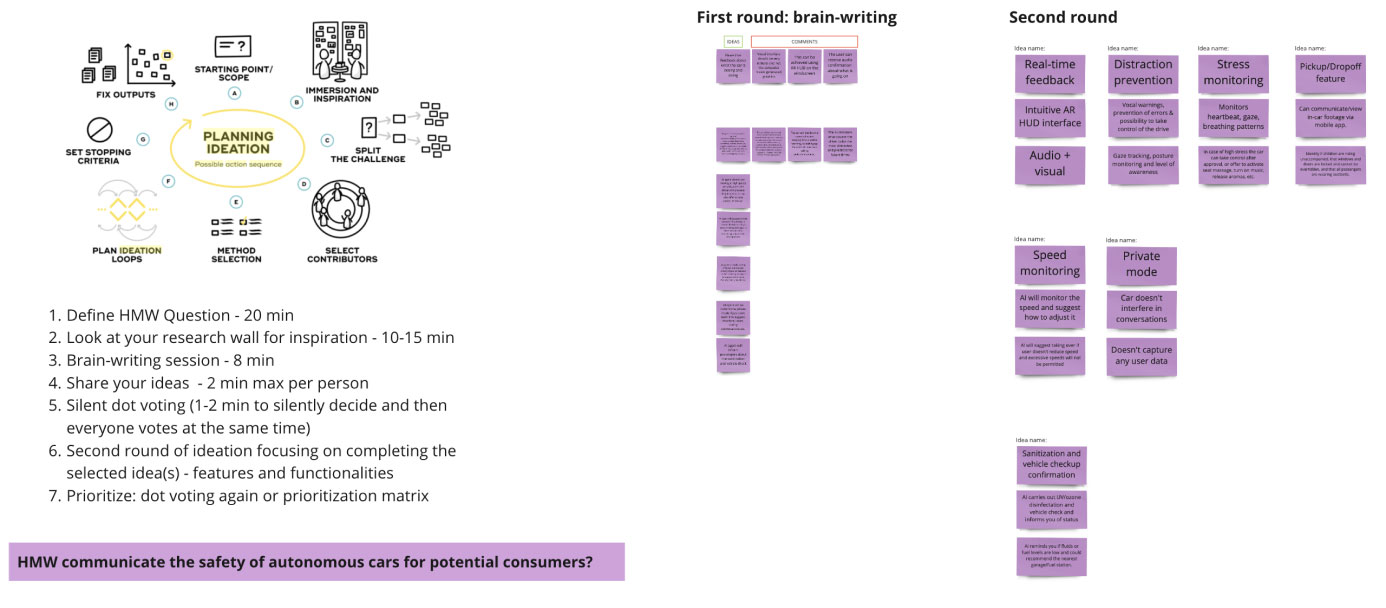

Ideation

The next step was to conduct a brainwriting session and develop an initial feature list for our minimum viable product.

Card sorting

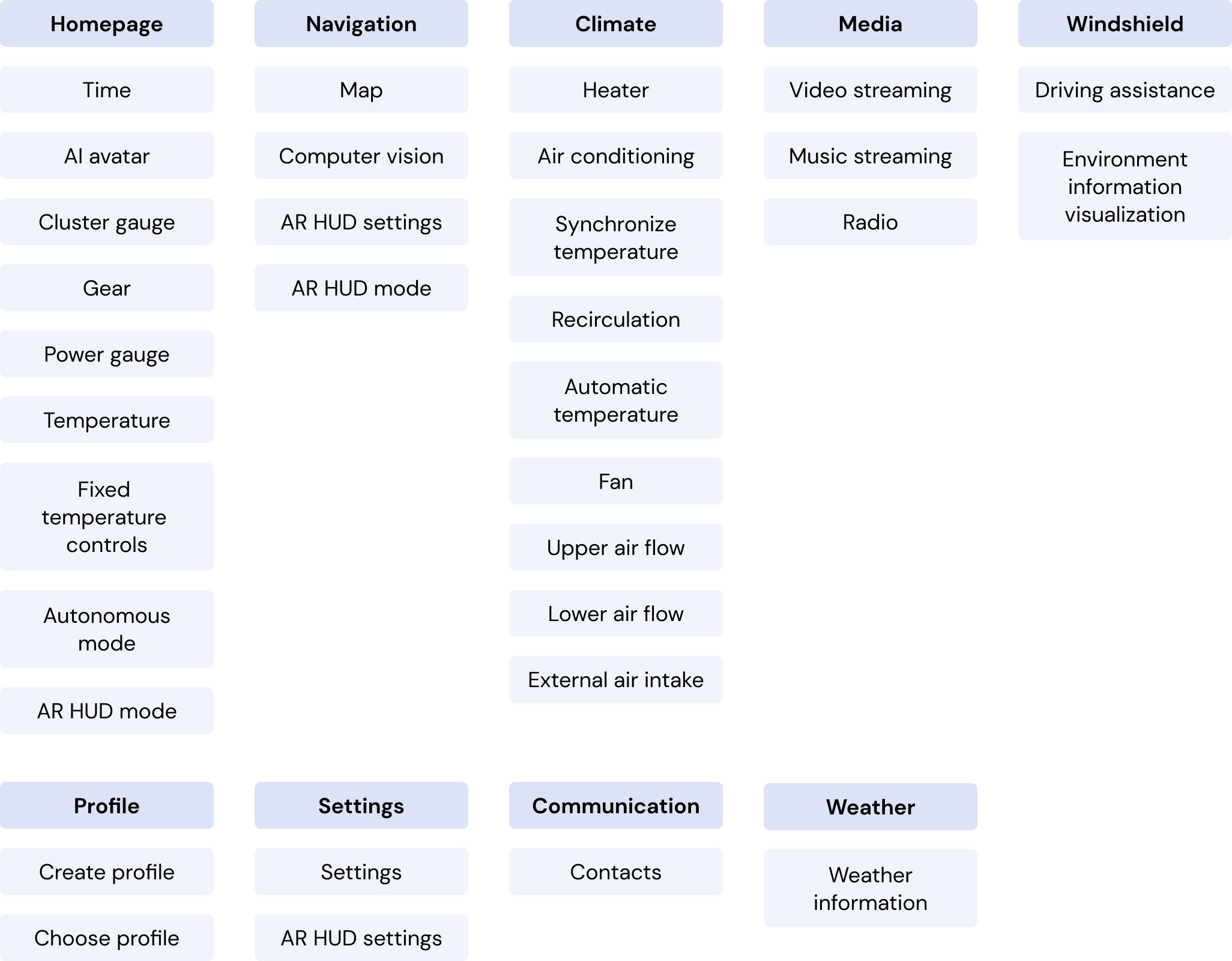

We created several iterations of the MVP features, and we ran a card sorting session with users to categorize the AI agent’s features according to priority. This was conducted in order to better understand their perception and to guide us in the definition of features for our MVP.

We ran our card sorting sessions in an unmoderated setting with 10 participants online. The participants were able to read cards for 18 features and were instructed to sort them according to high priority, medium priority, and low priority.
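As a rough illustration of how priority sorts like these could be aggregated into a ranked feature list, here is a minimal sketch. The 3/2/1 scoring weights, the function name and the example responses are all assumptions made for illustration; they are placeholders and not the data or method from our study.

```python
from collections import defaultdict

# Assumed weights for each priority bucket (illustrative, not from the study).
WEIGHTS = {"high": 3, "medium": 2, "low": 1}


def rank_features(responses):
    """Rank features by their mean priority weight across participants.

    `responses` is a list with one dict per participant, mapping a
    feature name to "high", "medium" or "low".
    """
    totals = defaultdict(int)
    counts = defaultdict(int)
    for sort in responses:
        for feature, bucket in sort.items():
            totals[feature] += WEIGHTS[bucket]
            counts[feature] += 1
    means = {feature: totals[feature] / counts[feature] for feature in totals}
    # Highest mean first; ties are broken alphabetically for stable output.
    return sorted(means.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical placeholder responses from two participants.
example_responses = [
    {"Emergency call": "high", "Weather alerts": "medium", "Music": "low"},
    {"Emergency call": "high", "Weather alerts": "high", "Music": "medium"},
]
ranking = rank_features(example_responses)
```

Averaging rather than summing keeps the ranking comparable if some participants skip a card.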

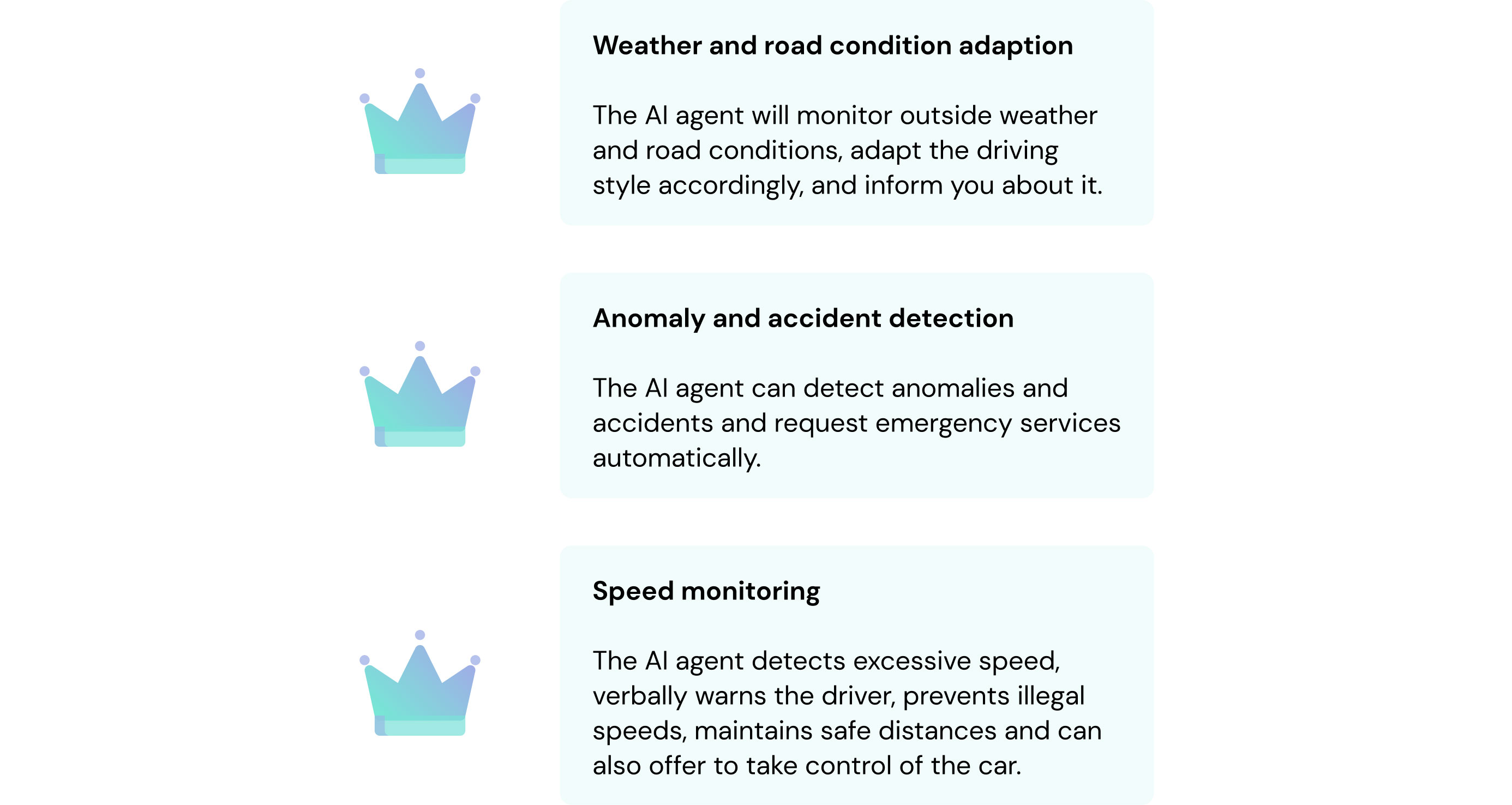

MVP features

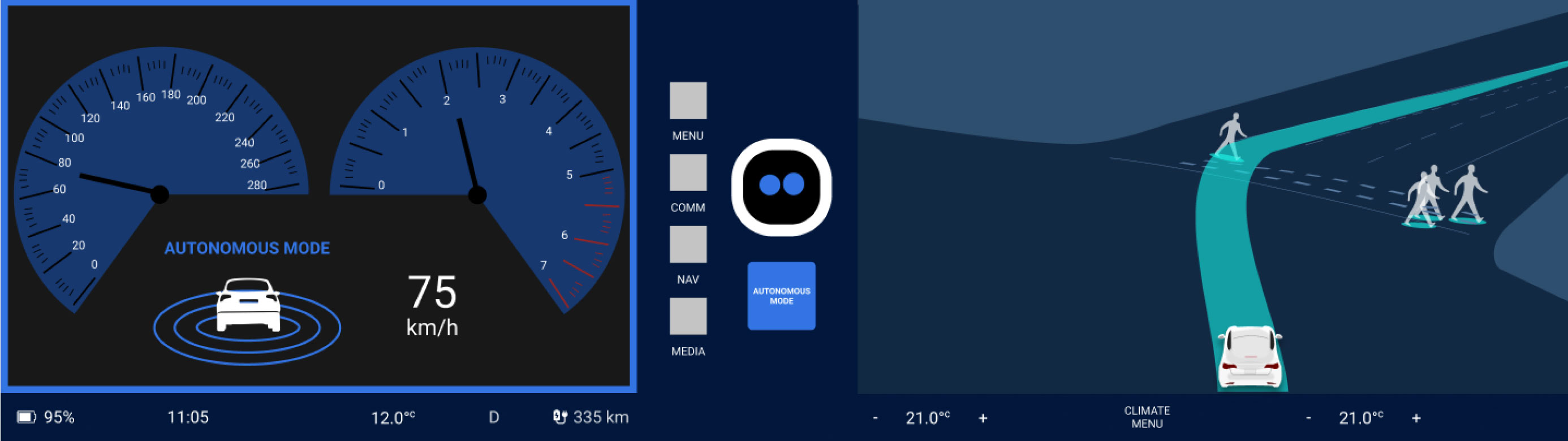

To promote trust, we added emotional design to our AI agent. It is the endearing element of the experience that conveys empathy and is designed to have a friendly personality. The human-like interaction enhances the overall user perception of trust.

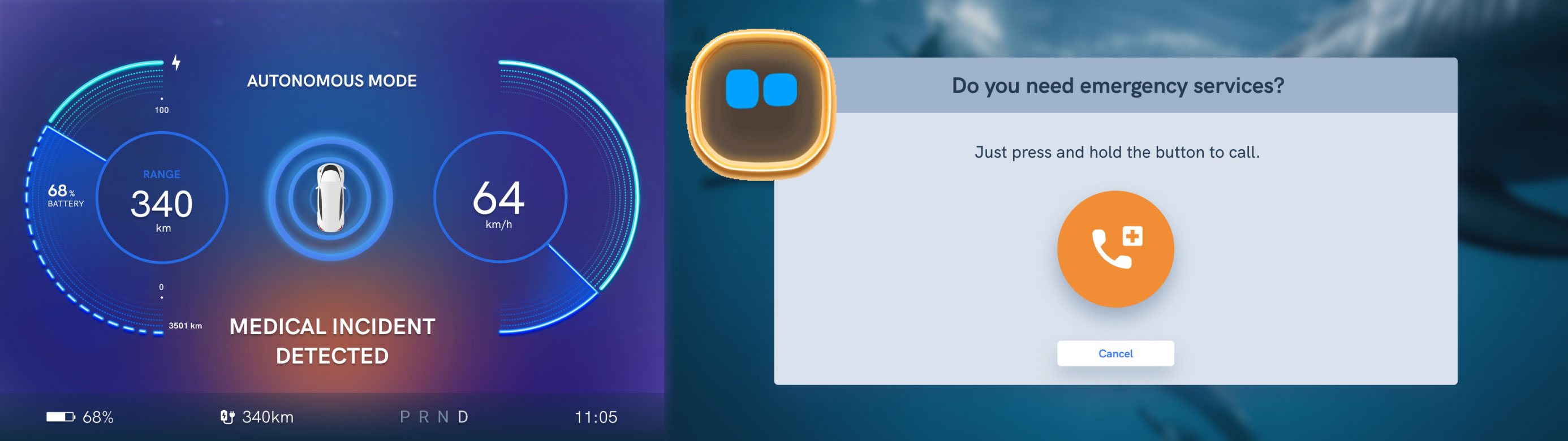

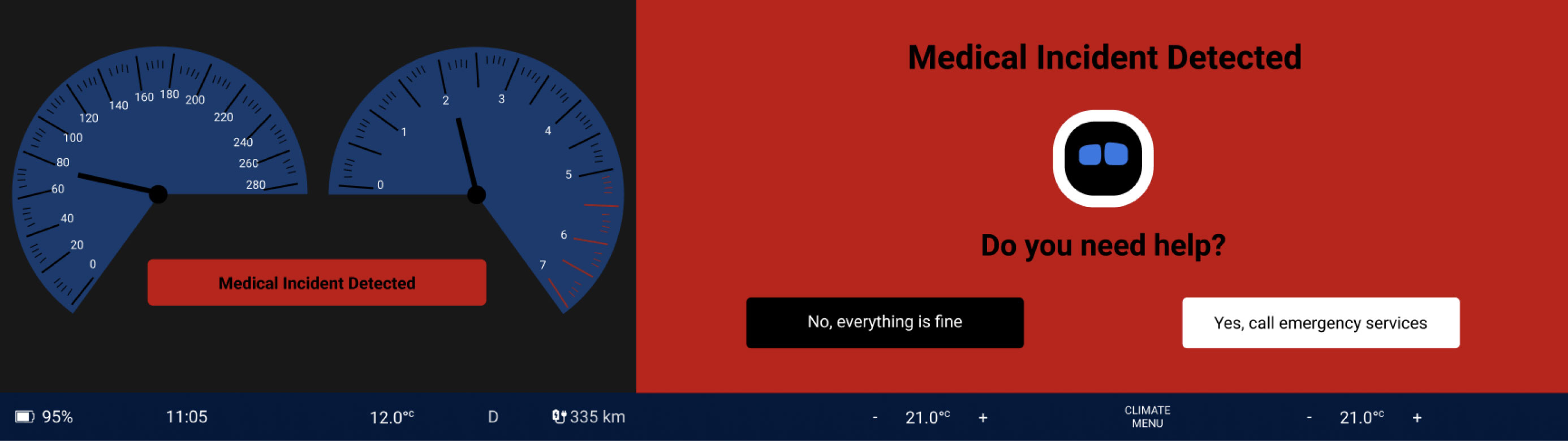

To provide safety, the AI agent recognises and informs the user of weather and road conditions. It also recognises if the user is having a medical emergency while in the car and can request emergency services on their behalf.

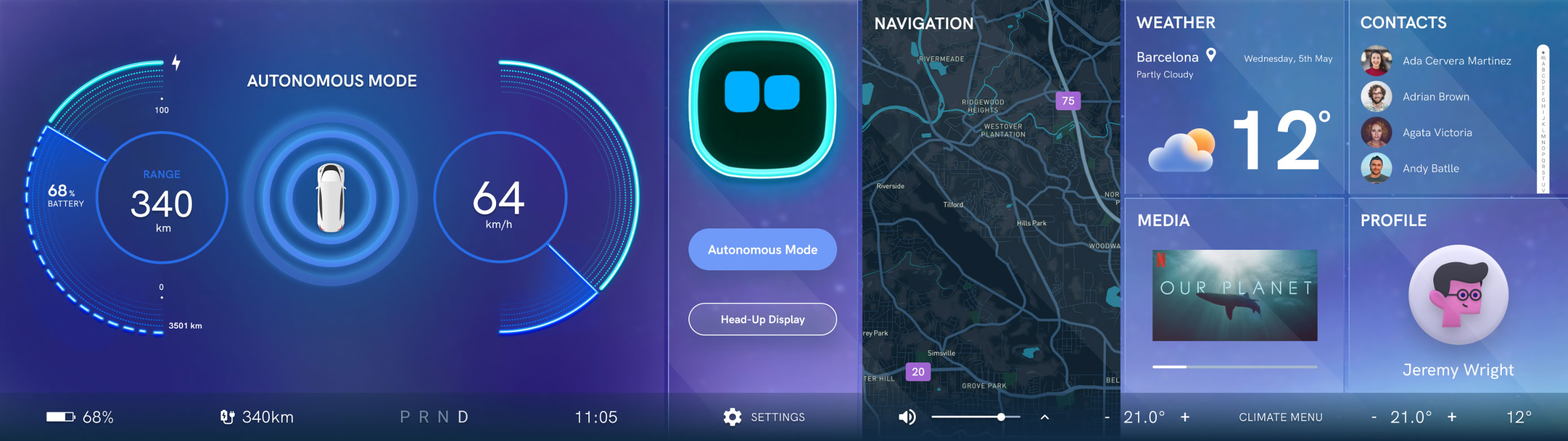

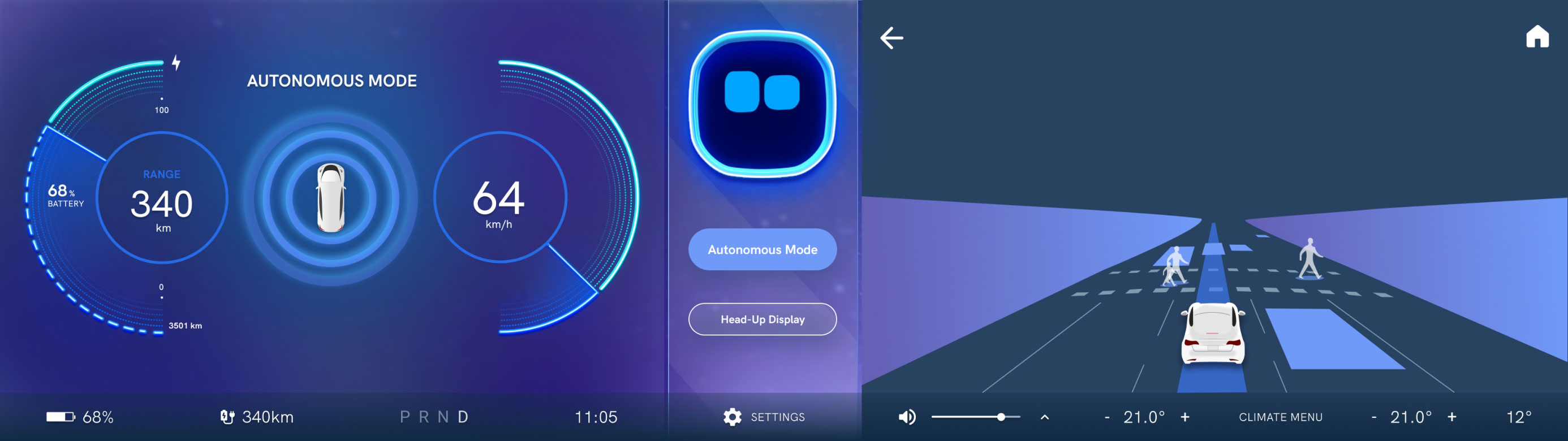

Regarding interactions, we created an infotainment system that the user can interact with via voice and gestures. When the user points to their immediate surroundings, augmented reality graphics are projected onto the windscreen of the vehicle to provide useful information.

Use cases

We planned the use cases that would form our value proposition hypothesis as our first prototype and I created an illustrated storyboard depicting the customer journey.

Test hypothesis

The objective of our test hypothesis was to better understand how people perceive trust and safety while being driven by a fully autonomous car that interacts with passengers via an AI assistant using voice and gestures, and to improve their perceptions through our experience design.

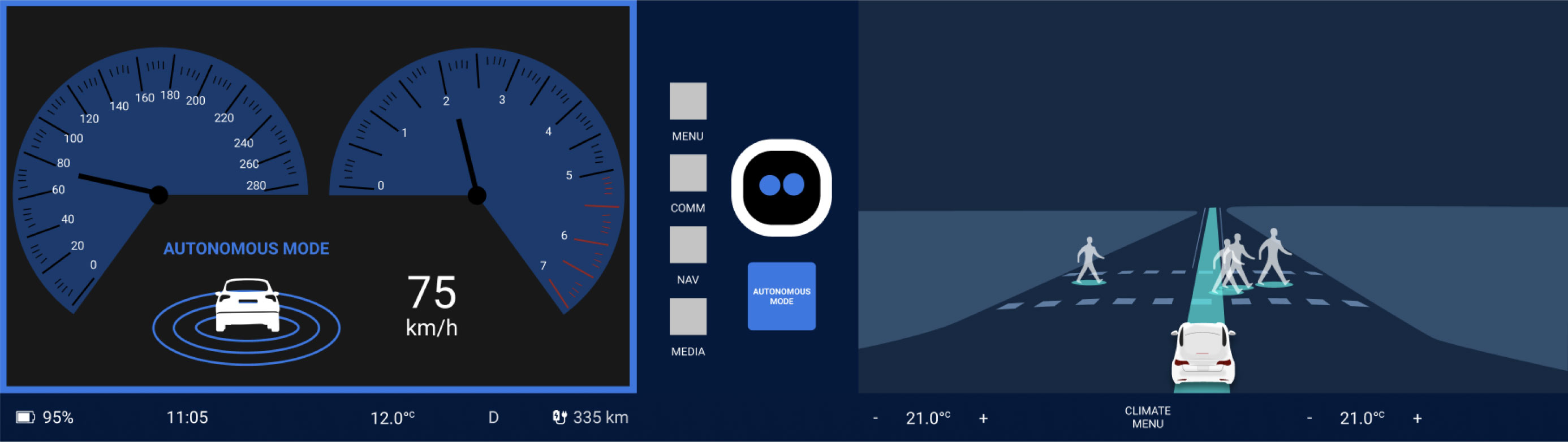

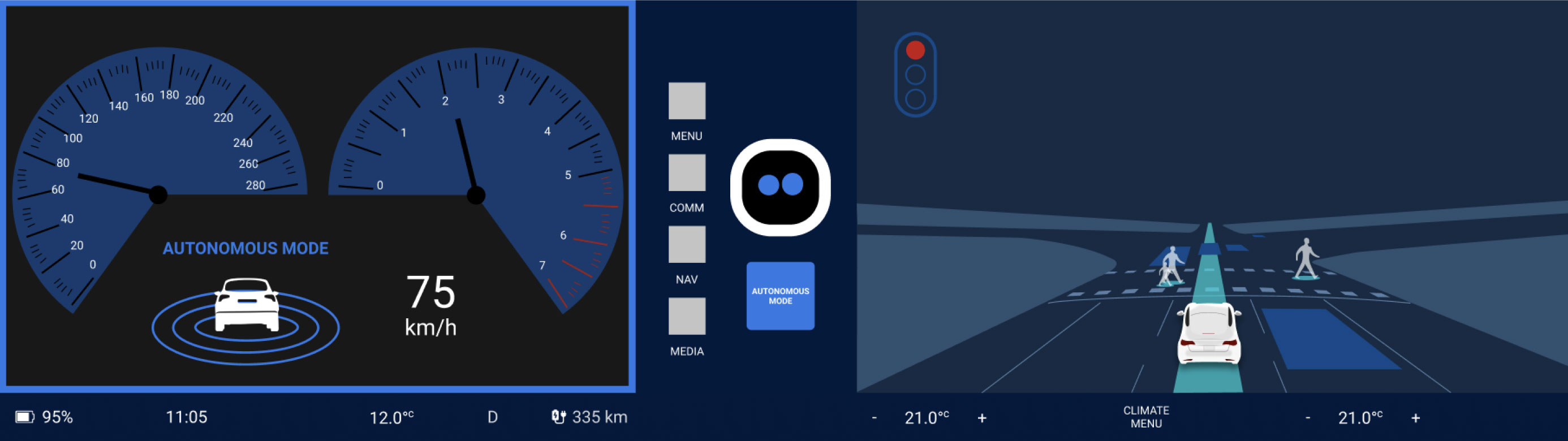

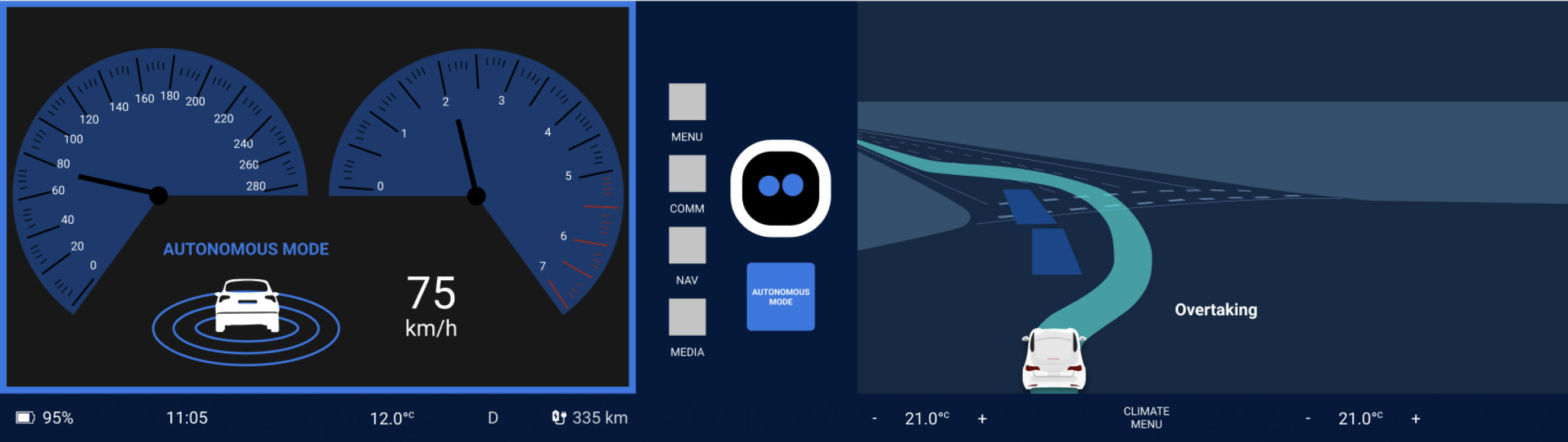

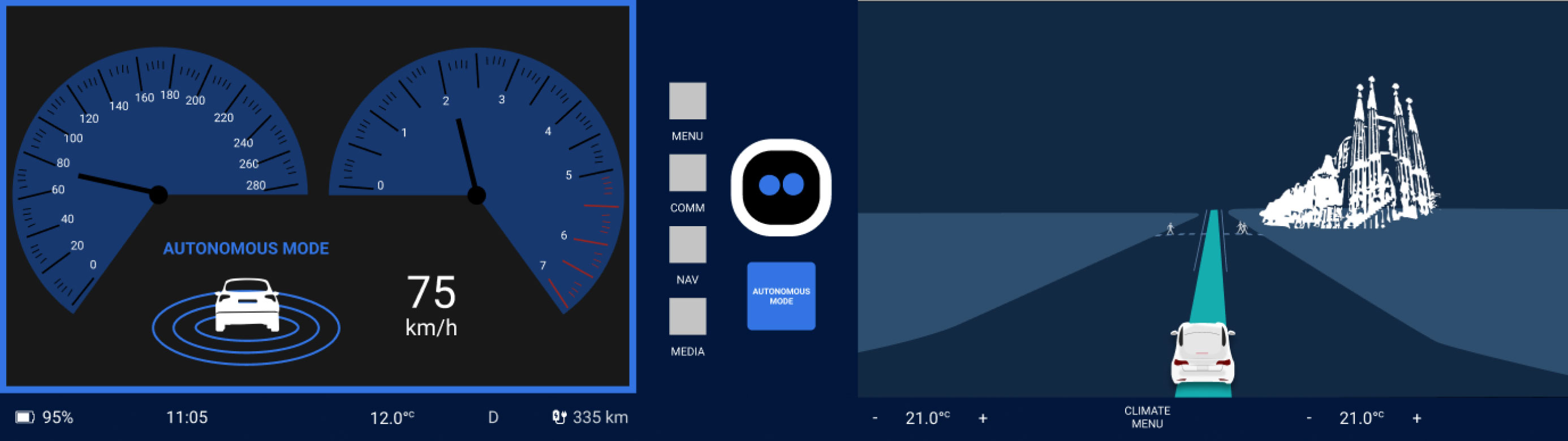

We created a “Wizard of Oz” experiment simulating the experience of being a passenger in a self-driving car. To create the experience, I filmed footage inside a real car along a 5-minute route through a Barcelona residential area that included critical incidents: navigating a pedestrian-heavy intersection, navigating traffic lights and overtaking stopped cars. We later edited the footage to add an effect of heavy rain and the sound of ambulance sirens, and we divided the journey into 4 tasks for users to complete during the experience.

In collaboration with Gestoos, we integrated their gesture technology for the augmented environment use case. This functional technology of gesture recognition activates a simulated projection of information onto the windscreen when the user makes a pointing gesture.

In a lab environment set up at Gestoos, the simulated car journey was displayed on a large monitor that acted as the vehicle windscreen, with a camera above it to register the pointing gestures. The user sat behind a steering wheel with a tablet on which to record their feedback, and a smaller monitor to the right displayed the infotainment system. Our AI assistant was simulated via speaker by one member of the team working remotely from Zurich.

Testing process

Low/Mid-fidelity prototype

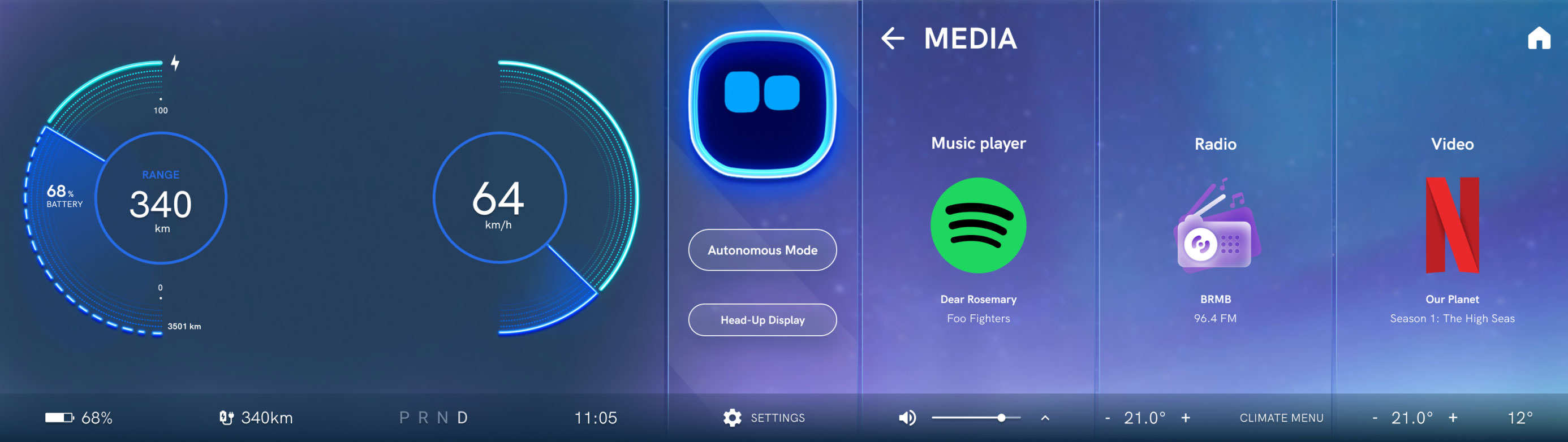

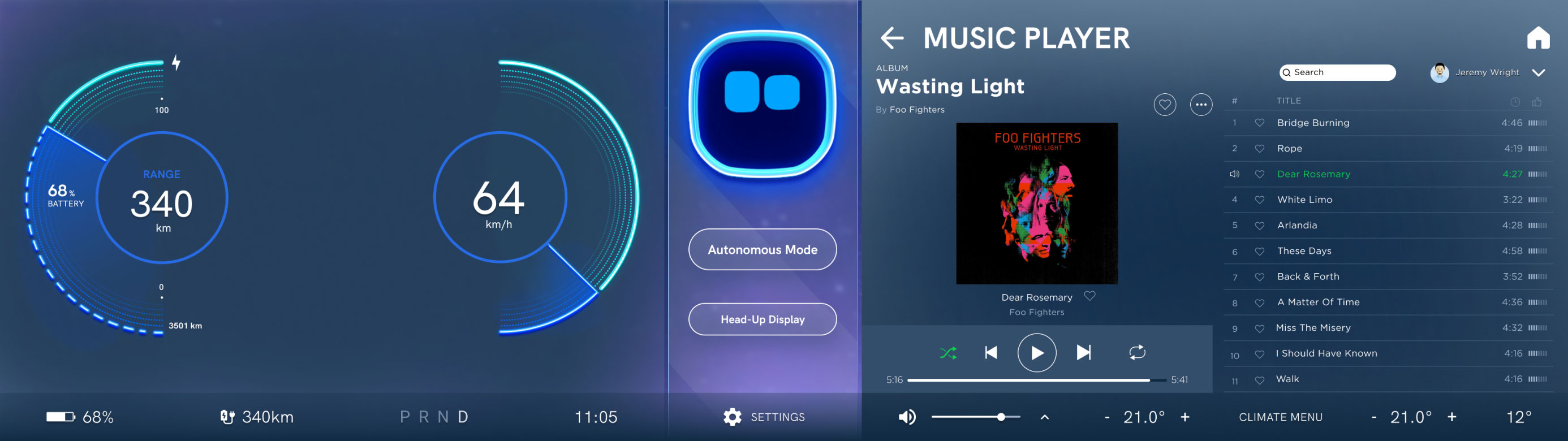

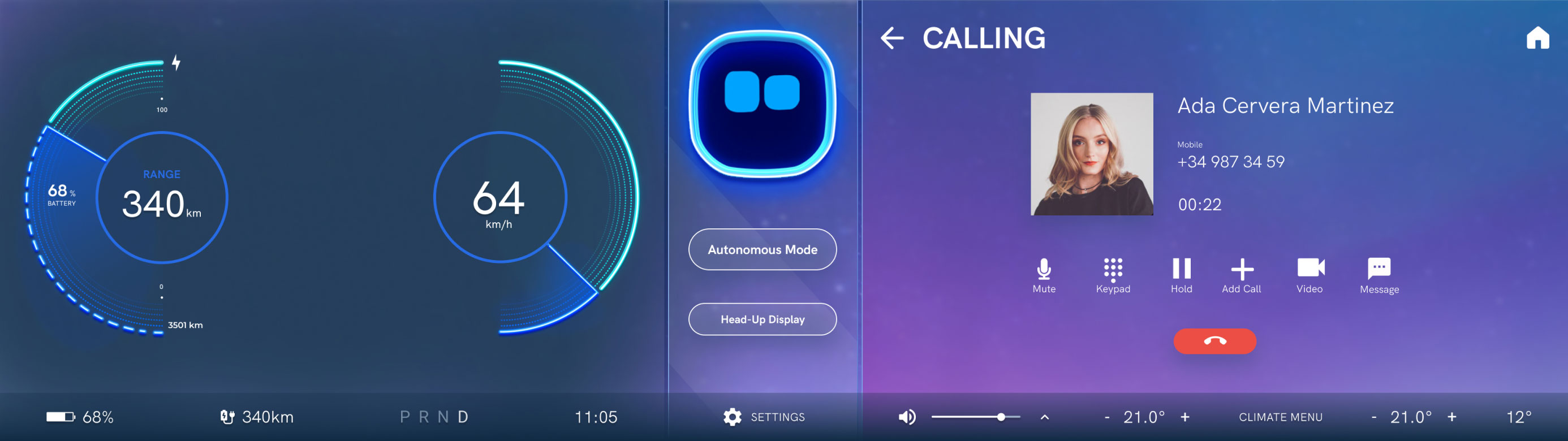

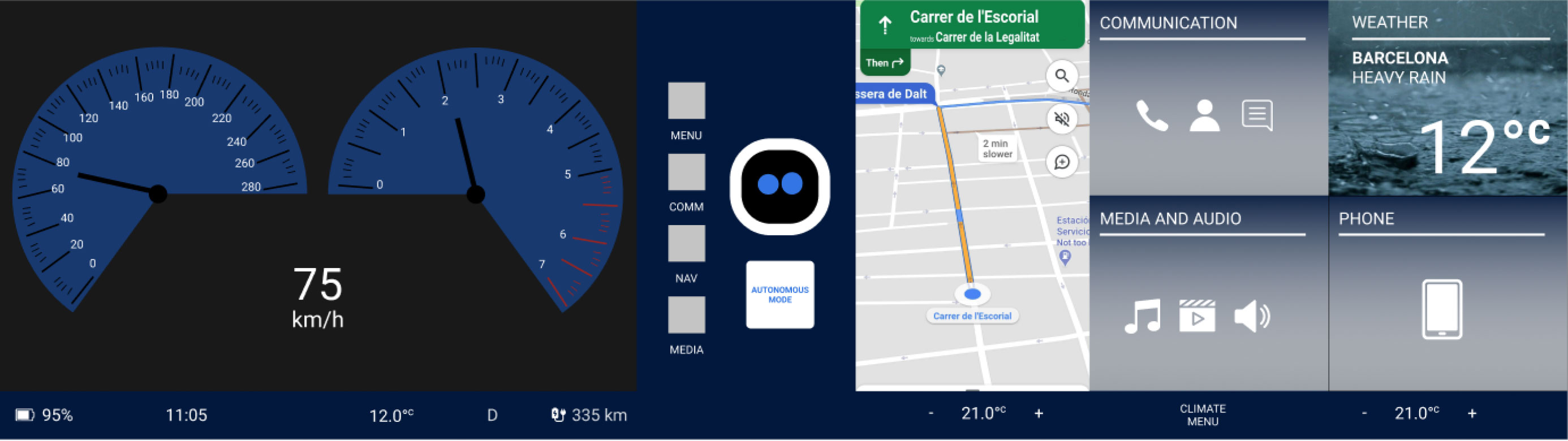

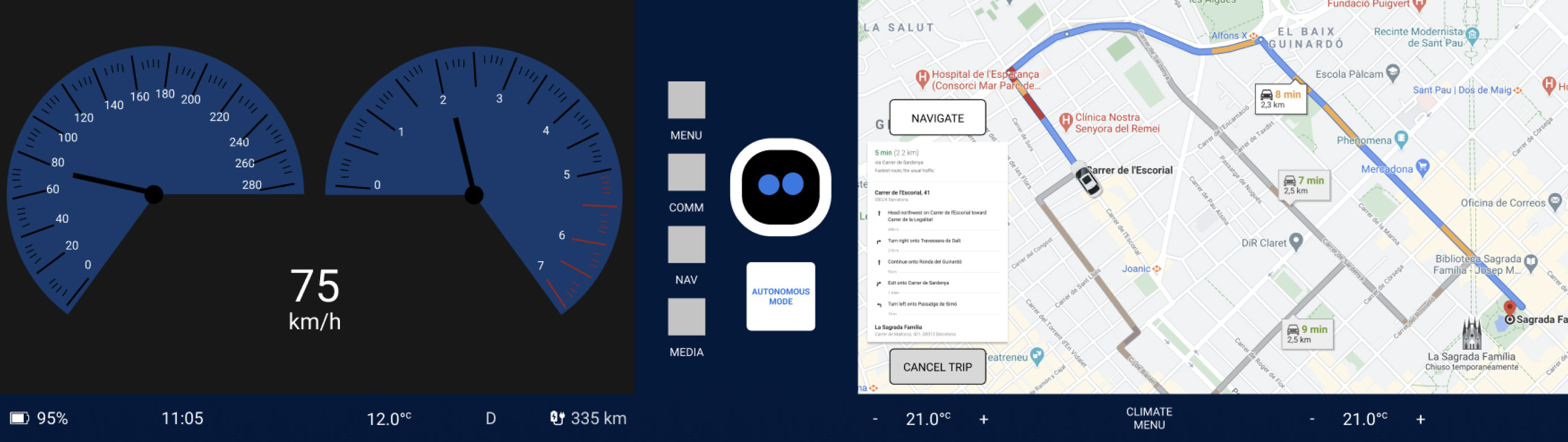

We conducted an ideation session, collecting sources of inspiring products, services, and experiences. Based on our research on the main use cases for infotainment systems (navigation, listening to music and making telephone calls), we created screens with an aspect ratio of 32:9, designing for a future concept infotainment system that uses a greater surface area than the traditional infotainment screen.

We decided to create a low/mid-fidelity prototype for our infotainment system with some colour and visual features, because it was important to provide sufficient visual design cues to help the user understand the prototype and to better simulate the experience of a realistic in-car journey.

Low/Mid-fidelity wireframes

Test hypothesis insights

Despite the lab setting, many of the participants stated at the end of the study that they felt they really were in a self-driving vehicle, watching how it drives, so the simulation method was quite effective.

Later, I presented our progress and key test insights to an audience of 20 people from Aptiv and Gestoos.

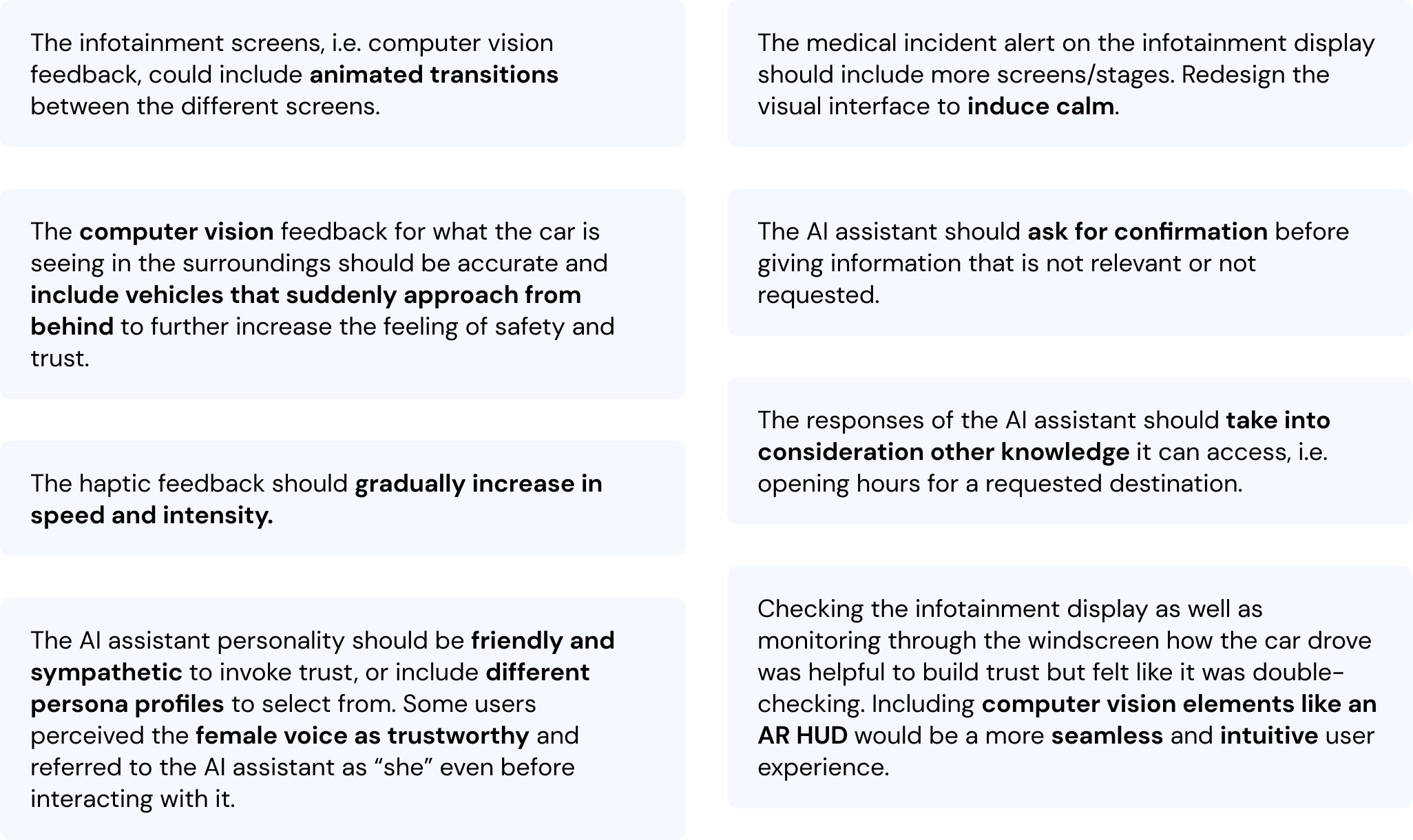

Design considerations for next iteration

Business proposal

As part of the project brief, we were also tasked with creating a business proposal. For this purpose, we created the following:

-Business model canvas

-Product roadmap

-SWOT analysis (strengths, weaknesses, opportunities and threats)

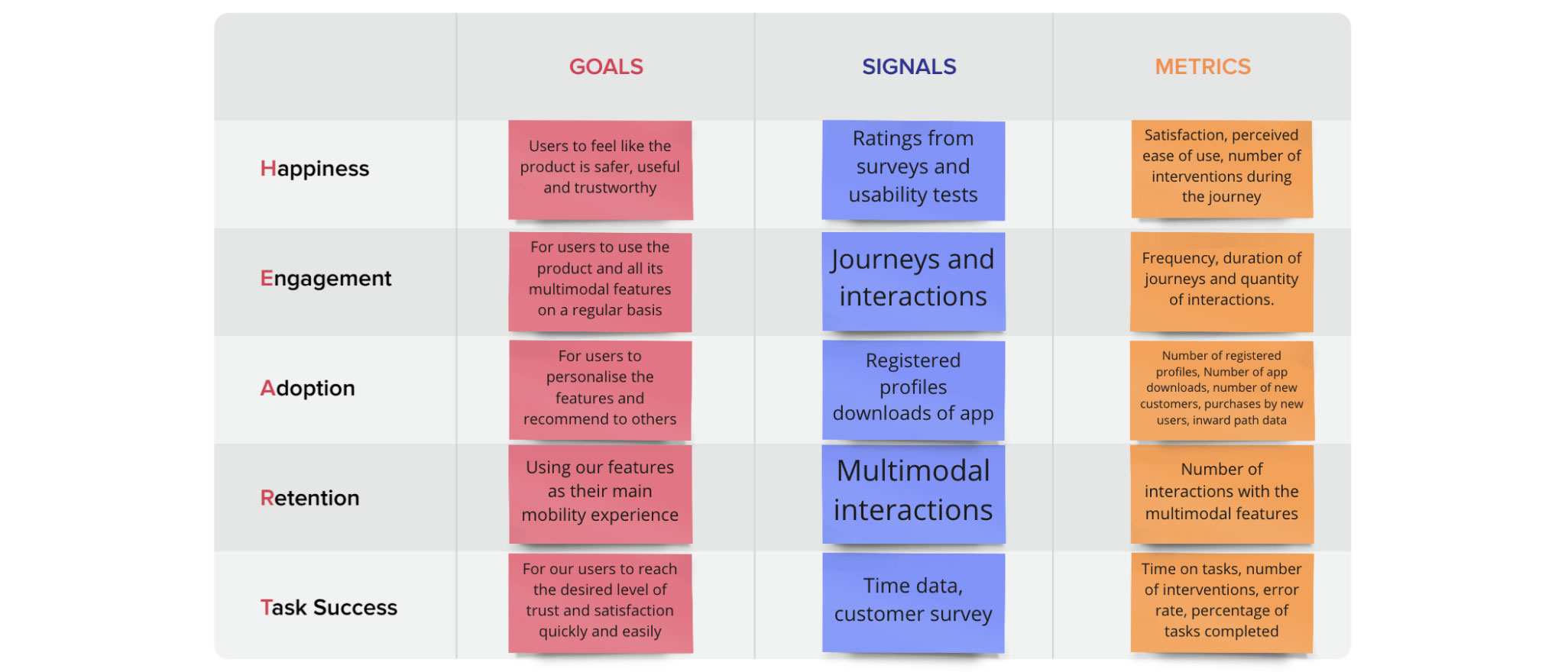

-Google HEART framework to set UX goals

Benchmarking

We conducted benchmarking to see how our solution would compare with other companies in the industry. As a new product, it would still have to build the reputation that legacy companies such as Mercedes-Benz and BMW have already achieved. In comparison, however, the ARA solution would have more interaction features to offer. Other companies offer multimodal interaction: Mercedes-Benz, for example, includes voice, touch, gaze, and gestures but does not have an AI agent avatar. NIO is the only company that offers a human-like AI agent, but it lacks gaze and gesture interactions.

Information architecture

After validating that our experience design improved user perception of safety and increased their level of trust in the autonomous vehicle technology, we conducted a second card sorting session with target users, this time to define categories for the information architecture.

Low-fidelity usability testing

We tested the low-fidelity prototype infotainment interface with 5 users, in order to gain insights on how to move forward and iterate on the design for the high-fidelity prototype.

4.3. Deliver

In this phase, we developed and tested our high-fidelity prototype, which was an iteration of the first prototype with final design graphics and content. We also initiated a collaboration with the company German Autolabs to discuss integration with their voice assistant technology.

User interface design

I created the style tile which conceptualized the visual direction for our high-fidelity design. Taking inspiration from the Aurora Borealis, I wanted to portray a modern, clean, and pleasant visual experience, using a blue-toned palette to promote calmness and trust. I also decided to use glassmorphism techniques, seen in some icons and in the menus, to create an attractive and minimalistic yet intuitive interface for users. We chose the HK Grotesk typeface as it is friendly and distinguishable.

With inspiration from Pixar, I created an avatar to be the face of the AI agent, showing expressions, creating an emotional connection with the user and inspiring trust using animation, sound, and colour. The avatar is referred to as ARA but our intention is that it can be adapted, including the infotainment welcome screen and interface colours, to the branding of any car company. The AI assistant wake word could also be personalised. I also created a neutral state animation using blue and when ARA is talking, it turns a lighter shade. There is also a medical emergency version where it turns orange and adopts a worried expression.
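The avatar states described above can be summarised as a simple mapping from state to appearance. The sketch below is only an illustration: the names `AvatarState`, `APPEARANCE` and `resolve_state` are hypothetical, the colour strings are descriptive labels rather than real design tokens, and giving the emergency state priority over the talking state is an assumption.

```python
from enum import Enum


class AvatarState(Enum):
    NEUTRAL = "neutral"
    TALKING = "talking"
    MEDICAL_EMERGENCY = "medical_emergency"


# Descriptive labels only; the expression for the neutral and talking
# states is assumed, as only the emergency expression is specified.
APPEARANCE = {
    AvatarState.NEUTRAL: {"colour": "blue", "expression": "neutral"},
    AvatarState.TALKING: {"colour": "lighter blue", "expression": "neutral"},
    AvatarState.MEDICAL_EMERGENCY: {"colour": "orange", "expression": "worried"},
}


def resolve_state(is_talking, emergency_detected):
    """Pick the avatar state for the current situation.

    Assumption: a detected medical emergency overrides the talking state.
    """
    if emergency_detected:
        return AvatarState.MEDICAL_EMERGENCY
    if is_talking:
        return AvatarState.TALKING
    return AvatarState.NEUTRAL


current = resolve_state(is_talking=True, emergency_detected=True)
look = APPEARANCE[current]
```

Keeping the appearance in a single table would also make it straightforward to swap colours when adapting the avatar to another brand.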

For the final high-fidelity prototype, we repeated some of the previous customer journey use cases, this time with improved interactions and improved functioning gesture technology. We also added two new use cases: in the first, the car performs an emergency stop whilst in autonomous mode; in the second, the user navigates to Netflix.

Digital marketing campaign

The future of (not) driving

As part of the project brief, we were also tasked with creating a digital marketing strategy and campaign plan. For this purpose, we made a marketing video for which I created all the animation and graphics.

5.0. Next steps

This was a 6-month conceptual project, and I believe that in that time we created a strong solution that successfully addressed the challenge of improving user perceptions of safety and trust in self-driving car technology.

It was a fantastic achievement to integrate functioning gesture technology into the solution. Had time allowed, my strategic goal for continuing the project would have been to develop it into an industry-level multimodal infotainment system. This would mean integrating the functional voice assistant technology of German Autolabs, the gaze detection and touch technology of Gestoos, functioning computer vision and augmented reality projections.

Furthermore, as a result of working on this project I now have great passion and interest in developing user experience in the automotive industry and would love the opportunity to continue working in this exciting field.